I hear from @arbitrage that a number of users at the top of the leaderboard have high exposure to just one feature, or may in fact simply be submitting a single feature, such as feature_intelligence1, as their entire prediction.

It’s tempting to say this is crazy and will result in big burns. But is it possible that feature timing can be done well and reliably, and that people staking with lots of exposure to one feature are not crazy?

One way to argue that feature timing doesn’t work is to show that features move randomly. That is, a feature is just as likely to have >0 correlation with the target as <0 correlation, regardless of its recent history. In other words, feature performance would be memoryless, like betting on black or red in roulette: 7 reds in a row doesn’t make black more likely (or red more likely, for that matter).

So if you score each feature against the target in every era, do the scores look like roulette, or do they show non-random “runs” or “regimes” that can’t be explained by chance?

If they don’t behave randomly, can they be modeled, and can we say things like “now is a good time to have a little bit of extra exposure to feature_intelligence1”?

One test I like for randomness is the Wald–Wolfowitz runs test (see Wikipedia), but I lost my Python code for it.
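
Since the original code is lost, here is a minimal sketch of what a runs test could look like. It is not the lost code. It uses the textbook normal approximation, and the function name `runs_test` and the toy sequence are made up for illustration. On the real data you would pass something like the per-era scores of one feature compared against zero (or against their mean).

```python
import math
import numpy as np

def runs_test(signs):
    """Wald-Wolfowitz runs test on a binary sequence (normal approximation).

    Returns (number_of_runs, expected_runs, z_score, two_sided_p_value).
    Hypothetical helper for illustration only.
    """
    s = np.asarray(signs, dtype=bool)
    n1 = int(s.sum())        # number of True values (e.g. eras with corr > 0)
    n2 = int(s.size - n1)    # number of False values
    if n1 == 0 or n2 == 0:
        raise ValueError("need both outcomes present to test for runs")
    # a new run starts wherever a value differs from its predecessor
    runs = 1 + int(np.count_nonzero(s[1:] != s[:-1]))
    n = n1 + n2
    expected = 2.0 * n1 * n2 / n + 1.0
    variance = 2.0 * n1 * n2 * (2.0 * n1 * n2 - n) / (n ** 2 * (n - 1.0))
    if variance <= 0:
        raise ValueError("sequence too short for the normal approximation")
    z = (runs - expected) / math.sqrt(variance)
    # two-sided p-value under the standard normal
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return runs, expected, z, p_value

# toy sequence with long streaks: fewer runs than chance would give
toy = [1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0]
runs, expected, z, p_value = runs_test(toy)
print(runs, expected, round(z, 2), round(p_value, 4))
```

A strongly negative z means fewer runs than expected (streaky, regime-like behavior). A strongly positive z means too many runs (mean reversion). With only a hundred or so eras the normal approximation is rough, so treat borderline p-values with caution.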

Another, easier one is autocorrelation. If you score every feature against the target by itself in each era and then compute the autocorrelation of those per-era results, you find that some features have large positive or negative autocorrelation. For example, “feature_wisdom27” has 0.24 autocorrelation. Positive autocorrelation means that if a feature has had good performance lately, it will tend to continue to have good performance (long runs). Negative autocorrelation means it’s mean reverting.

If feature_wisdom27 has had >0 performance with the target four eras in a row, how much would you stake that it will have a fifth >0 performance next? How could you build a model to give you good probability estimates of what will happen next so you can use those to inform your stakes?
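
One very simple baseline for that probability, sketched here under assumptions and not offered as a recommended staking model: count how often a streak of k positive eras was followed by another positive era. The function name `prob_positive_after_streak` and the Beta(1, 1) pseudo-counts are illustrative choices of mine. The pseudo-counts pull small samples toward 0.5.

```python
import numpy as np

def prob_positive_after_streak(signs, streak_len,
                               prior_successes=1.0, prior_failures=1.0):
    """Estimate P(next era > 0 | previous `streak_len` eras were all > 0).

    Scans history for every window of `streak_len` consecutive positives and
    checks what came next. The prior pseudo-counts are an assumption that
    shrinks the estimate toward 0.5 when few streaks have been observed.
    Returns (estimate, number_of_streaks_observed).
    """
    s = np.asarray(signs, dtype=bool)
    hits = 0
    total = 0
    for t in range(streak_len, len(s)):
        if s[t - streak_len:t].all():   # the preceding window was all positive
            total += 1
            hits += int(s[t])           # did the streak continue?
    estimate = (hits + prior_successes) / (total + prior_successes + prior_failures)
    return estimate, total

# toy history of per-era signs (1 = feature had > 0 correlation with the target)
toy = [1, 1, 1, 0, 1, 1, 1, 1, 0, 0]
estimate, n_streaks = prob_positive_after_streak(toy, streak_len=2)
print(round(estimate, 3), n_streaks)
```

The second return value matters as much as the first: a four-era streak will have occurred only a handful of times in the training eras, so the estimate is noisy and is measured in-sample. Comparing it with the unconditional rate (`streak_len=0`) shows whether the streak adds any information at all.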

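import numpy as np

# Assumes `data` is the training DataFrame, already loaded, with an "era"
# column of strings such as "era1", a "target" column, and "feature*" columns.

TARGET_NAME = "target"
PREDICTION_NAME = "feature_intelligence1"

# Submissions are scored by Spearman correlation
def correlation(predictions, targets):
    ranked_preds = predictions.rank(pct=True, method="first")
    return np.corrcoef(ranked_preds, targets)[0, 1]

# convenience method for scoring
def score(df):
    return correlation(df[PREDICTION_NAME], df[TARGET_NAME])

data["eraNum"] = data["era"].apply(lambda x: int(x[3:]))

feature_names = [f for f in data.columns if f.startswith("feature")]

# lag-1 autocorrelation of a sequence
def ar1(x):
    x = np.asarray(x, dtype=float)
    return np.corrcoef(x[:-1], x[1:])[0, 1]

# For each feature: score it against the target in every era, then print the
# lag-1 autocorrelation of the "above its own mean" indicator.
# The feature is passed as the prediction so that it is the one being ranked.
for f in feature_names:
    feature_per_era_corrs = data.groupby("eraNum").apply(lambda d: correlation(d[f], d[TARGET_NAME]))
    print(f)
    print(ar1((feature_per_era_corrs > np.mean(feature_per_era_corrs)) * 1))

feature_wisdom27_per_era_corrs = data.groupby("eraNum").apply(lambda d: correlation(d["feature_wisdom27"], d[TARGET_NAME]))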

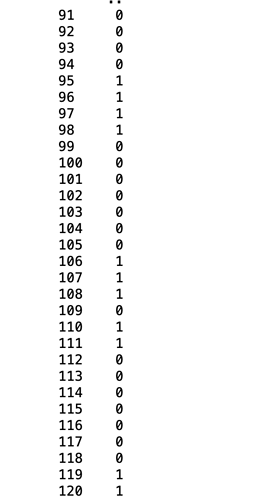

(feature_wisdom27_per_era_corrs>np.mean(feature_wisdom27_per_era_corrs))*1

feature_wisdom27 runs: