This part is in fact data leakage and could lead to heavily overestimated performance. In live you will not be able to perform early stopping on the live data, but that is essentially what you are doing here.

Anyway, as @wigglemuse, an active member of the forum/chat (which I have probably read in full), is skeptical, I will look into it. I could have made a mistake, which is quite possible, but at the moment I don’t see where. I have used/tried a lot of tricks mentioned here by the community, but also a couple of my own techniques. For sure I want to stay far from “cheating”.

Let me add results with early stopping done on “unused”/untouched training data. I cut out a portion of contiguous eras for this.
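For anyone who wants to reproduce this kind of split, here is a minimal sketch of cutting a contiguous block of eras off the end of the training data so it can be used only for early stopping. The function name `split_last_eras`, the `era` column name, and the assumption that eras are stored as sortable integers are all mine and not taken from the post; the post also does not say which portion of eras was cut, so taking the last ones is just one option.

```python
import pandas as pd


def split_last_eras(df, n_holdout, era_col="era"):
    """Split df into (train, holdout), where holdout is the last n_holdout eras.

    Assumes era_col holds sortable integers (hypothetical layout); string
    labels such as "era10" would need to be converted to numbers first.
    """
    eras = sorted(df[era_col].unique())
    if not 0 < n_holdout < len(eras):
        raise ValueError("n_holdout must be between 1 and the number of eras - 1")
    holdout_eras = eras[-n_holdout:]          # contiguous block at the end
    mask = df[era_col].isin(holdout_eras)
    return df[~mask], df[mask]
```

The holdout frame would then be passed as the early stopping set of whatever model is being trained, and never used for fitting.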

| | mean | std | sharpe | max_drawdown | apy |
|---|---|---|---|---|---|
| max_drawdown | 0.046483 | 0.023462 | 1.981191 | -0.007393 | 815.262085 |
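As a rough guide to how numbers like these are usually derived from per-era correlations, here is a sketch. The reported sharpe is consistent with mean divided by std (0.046483 / 0.023462 ≈ 1.9812). The drawdown definition below (largest drop of compounded per-era returns from their running peak) and the use of the sample standard deviation are my assumptions, not confirmed by the post, and `era_summary` is a hypothetical helper name. APY is left out because its formula is not given here.

```python
import numpy as np


def era_summary(era_corrs):
    """Summarise a sequence of per-era correlation scores."""
    c = np.asarray(era_corrs, dtype=float)
    mean = c.mean()
    std = c.std(ddof=1)                      # sample std (assumption)
    sharpe = mean / std
    # Treat each era's corr as a return and compound it (assumption).
    cumulative = np.cumprod(1.0 + c)
    running_max = np.maximum.accumulate(cumulative)
    max_drawdown = -(running_max - cumulative).max()
    return {"mean": mean, "std": std, "sharpe": sharpe,
            "max_drawdown": max_drawdown}
```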

As expected, this part has even better results in terms of corr. Of course, the model lost some of the signal from the cut part.

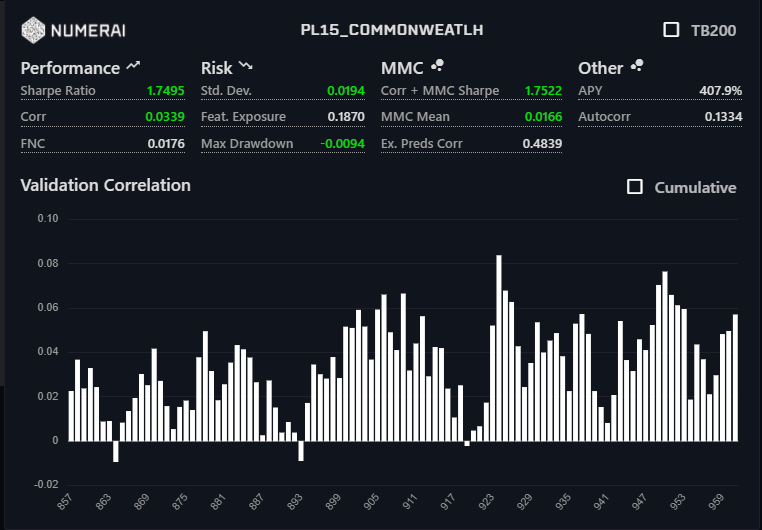

Validation without any connection to the training process.

Feature exposure is still quite good, but it suffered a bit from the lack of data.

That would be the other form of data leakage that I see: it seems like you have tweaked your model specifically for your out-of-sample performance. While the model never explicitly sees that data, it is built with performance on that very specific sample in mind. I would go through the same exercise of building my model to fit the last 100 eras of the training set as well as possible, then use that model once on the validation set. This will probably be a closer estimate of your live performance and give you an idea of the impact of data-mining bias in your current process.
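To make the suggested protocol concrete, here is a small sketch: every modelling choice is ranked on the held-out training eras only, and the validation set is scored exactly once with the winner. The column names (`era`, `target`), the rank-based correlation, and the helper names are assumptions for illustration; `pred_cols` stands for columns of predictions from candidate models that were already fitted without the holdout eras.

```python
import numpy as np
import pandas as pd


def per_era_corr(df, pred_col, target_col="target", era_col="era"):
    """Correlation between ranked predictions and the target, per era."""
    return pd.Series({
        era: np.corrcoef(g[pred_col].rank(pct=True), g[target_col])[0, 1]
        for era, g in df.groupby(era_col)
    })


def select_then_score_once(holdout, validation, pred_cols):
    """Pick the best candidate on holdout eras, then score it once on validation."""
    # Selection uses only the held-out training eras.
    holdout_means = {c: per_era_corr(holdout, c).mean() for c in pred_cols}
    best = max(holdout_means, key=holdout_means.get)
    # Validation is touched a single time, after the choice is final.
    return best, per_era_corr(validation, best).mean()
```

If the validation score from this procedure is much lower than the one obtained after tuning against validation directly, the gap is a rough measure of the data-mining bias.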

I am also curious whether you have done any feature neutralization, as your corr is very skewed across eras.
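In case the term is unfamiliar, feature neutralization usually means removing the part of the predictions that is linearly explained by the features. Below is a minimal sketch using ordinary least squares; the function name, the added intercept column, and the `proportion` parameter are my own choices for illustration and not necessarily what anyone in this thread uses. It would normally be applied separately within each era.

```python
import numpy as np


def neutralize(predictions, features, proportion=1.0):
    """Subtract `proportion` of the linear feature exposure from predictions."""
    p = np.asarray(predictions, dtype=float)
    F = np.asarray(features, dtype=float)
    # Add a constant column so the residual is also mean-zero (assumption).
    X = np.column_stack([F, np.ones(len(F))])
    beta, *_ = np.linalg.lstsq(X, p, rcond=None)   # least-squares fit of p on X
    return p - proportion * (X @ beta)
```

With `proportion=1.0` the result has zero linear correlation with every feature; smaller values trade some exposure for keeping more of the original signal.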