Hi all.

I came to this thread in search of explanations. I’m not making accusations, I’m offering theories and challenging those that are either unsupported by evidence or that don’t seem to jibe with my readings of the data (or both).

Please try to remember that you Numerai veterans were here once too. Not everyone participating in the tournament has been exposed to the same information about it. Apparently some of you have been in direct contact with members of the staff; others, like me, have just started their involvement and know only what’s on the website.

First of all: I’d love to watch it! It sounds like it has all the answers. But you haven’t shared it yet.

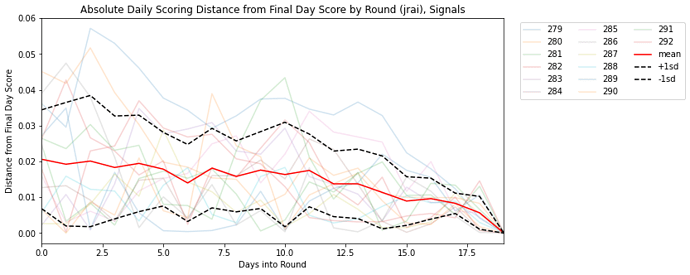

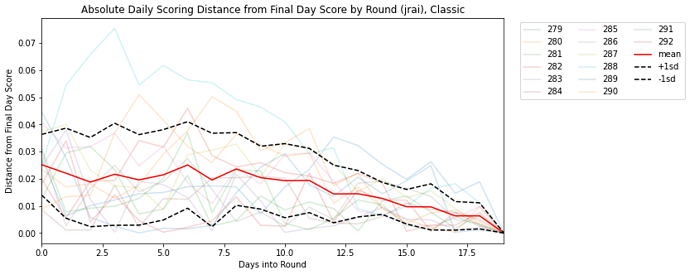

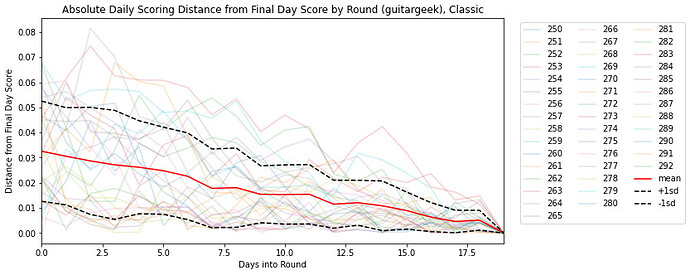

Second: Hiding things is a core part of the Numerai concept. They strip all identifying materials from the data set to keep the competition more data science and less finance. I understand and accept this. They’ve documented it clearly on the website. The daily scores, however, are the opposite of hidden: they’re front and center for all the world to see, yet they are only thinly documented (hence this thread).

Third: Conspiracy? Why did you bring that word up? Just because I’m not ready to accept your claims without evidence? Again, please try to remember that I haven’t had the same access to the primary sources that you’ve had and, like you, just want to hear answers from a source I can trust. Feeling that you understand things with great certainty doesn’t automatically make you a trustworthy source, since you are not a Numerai employee and have not (yet) offered any evidence beyond hearsay in support of your claims.

If you post that video that you keep referencing, I would greatly appreciate it. Then it will be available for anyone who has the same questions in the future and who wants to hear it straight from Richard’s mouth. This is less ideal than an update to the official Numerai docs but still a big step in the right direction.

Looking ahead, I hope you plan to cite (and make available) your sources in your upcoming FAQ. This is historically how knowledge is built, and for good reason.

Thanks for sharing this. I didn’t know that there was a complete lack of feedback in an earlier incarnation of the tournament. Daily feedback would certainly be an improvement on that, even if the early days are not indicative of the final score.

This is pretty funny. The thing I’ve asked for most in my posts is a trusted source of information (like Richard) to chime in on this topic. Please don’t misinterpret my skepticism of unfounded claims made by Numerai outsiders as a lack of trust in Numerai’s leadership. There is no connection between the two, which is of course the source of my skepticism in the first place.