I hope we can see these metrics’ importance w.r.t. TC for every resolved round, so we can follow the dynamics. I am curious about the new TC staking options: how will they affect MMC importance, given that the meta-model may become more different from the example model? I am still not sure whether I should optimize for MMC directly using the example predictions.

I am very excited for these changes. Even if there is a slight dip in NMR value, this is a big step forward for the hedge fund, which we all want and need to stick around for a long time :). Also, from a profitability standpoint, I appreciate that the barrier to entry is rising and that it is strategy related (feature engineering and modeling to optimize TC), not necessarily hardware dependent (looking at you, supermassive dataset).

I am wondering though if the team has done any testing and experimentation with the validation set and optimizing TC? Was the above analysis done inclusive of validation eras?

I have mixed feelings about the current validation set for CORR and MMC, so I am wondering if there are any related changes or improvements down the pipe in this regard?

I think I answered this here: Question on TC: Is it True Contribution or something else? - #3 by mdo

no doubt in the end this will happen

@mdo Assume someone stakes 100 NMR: what is the difference between staking on (0xCORR + 2xTC) and (1xCORR + 2xTC)?
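To make the question concrete, here is a rough sketch. It assumes, purely for illustration, that a round's payout is the stake times the multiplier-weighted sum of the scores, with no payout factor or clipping; the real payout rule may differ. The function name and the CORR/TC scores are made up.

```python
def round_payout(stake, corr, tc, corr_mult, tc_mult):
    """Hypothetical payout: stake times the multiplier-weighted sum of scores."""
    return stake * (corr_mult * corr + tc_mult * tc)


stake = 100.0          # NMR staked
corr, tc = 0.02, 0.01  # made-up round scores, for illustration only

tc_only = round_payout(stake, corr, tc, corr_mult=0, tc_mult=2)
corr_and_tc = round_payout(stake, corr, tc, corr_mult=1, tc_mult=2)

# Under this assumption the two options differ by exactly stake * corr,
# so 1xCORR adds exposure to CORR (gain or loss) on top of the TC payout.
difference = corr_and_tc - tc_only
```

Under that assumption the only difference is the extra `stake * corr` term, which can be positive or negative in any given round.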

Is this new metric TC robust against the p / (1-p) type of vulnerability?

Leaderboard Bonus Exploit Uncovered - Tournament - Numerai Forum

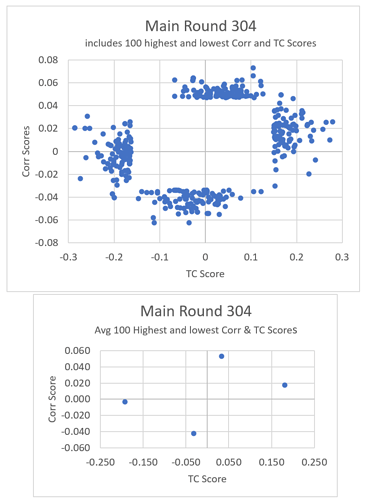

This makes sense. Outliers like Alfaprism_41 in Round 304, with CORR and MMC in the 99th percentile but TC in the 25th, need some unpacking.

It is symmetrical (1-p will get exactly opposite TC), and there is no bonus anymore, so shouldn’t be an issue.
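As an analogy only (this is not the TC computation), the same kind of symmetry is easy to check for plain rank correlation: flipping predictions to 1-p reverses the ranks, so the score changes sign exactly. The helper name and the random data below are illustrative assumptions.

```python
import numpy as np


def rank_corr(preds, target):
    """Pearson correlation between the ranks of preds and the target."""
    ranks = np.argsort(np.argsort(preds))  # 0..n-1, assumes no ties
    return np.corrcoef(ranks, target)[0, 1]


rng = np.random.default_rng(0)
p = rng.random(1000)       # made-up predictions in [0, 1)
target = rng.random(1000)  # made-up target

c = rank_corr(p, target)
c_flipped = rank_corr(1 - p, target)  # same magnitude, opposite sign
```

With a symmetric score and no leaderboard bonus, submitting both p and 1-p nets out to zero rather than giving a free option.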

Is there any preprocessing of users’ predictions before the SWMModel in the production system? For MMC, users’ predictions are converted to a uniform distribution, but I am wondering how this works for TC.

In particular, does only the rank matter for the TC calculation, or does the magnitude matter too? This information would help us understand how TC behaves.
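To illustrate what "only rank matters" would mean, here is a minimal sketch of a rank-based conversion to a uniform distribution. Whether TC applies such a step is exactly the open question here; the function name and scaling are assumptions, not Numerai's code.

```python
import numpy as np


def to_uniform(preds):
    """Map predictions to evenly spaced values in (0, 1) by rank (assumes no ties)."""
    preds = np.asarray(preds, dtype=float)
    ranks = np.argsort(np.argsort(preds))  # 0..n-1
    return (ranks + 0.5) / len(preds)


preds = np.array([0.1, 0.9, 0.5])
stretched = preds ** 3  # monotone transform: magnitudes change, order does not

u1 = to_uniform(preds)
u2 = to_uniform(stretched)  # identical to u1, so magnitude information is gone
```

If a step like this runs first, any monotone rescaling of the predictions has no effect on the score; if it does not, magnitudes could matter.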

To those commenting that they will likely withdraw from the competition because their current models, optimized for CORR/MMC, are not suitable for TC: I think that is the intention, because those models are earning rewards without helping TC or hedge fund performance.

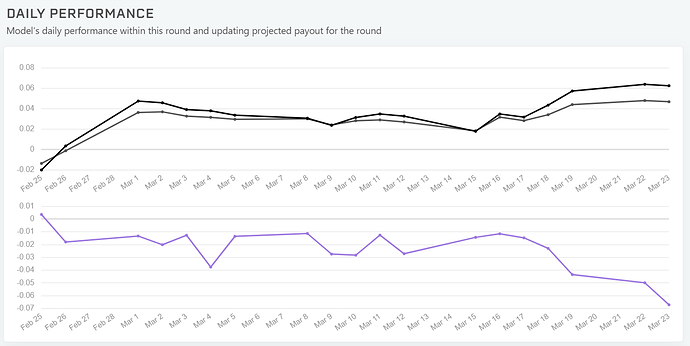

I am fortunately not in that group somehow. I recently checked my model and it would have made 1% more per week if I could stake on 2xTC instead of 2xMMC. It does however increase volatility as others have mentioned, but perhaps for a different reason. I currently have a 2.1 Sharpe with Corr+2xMMC, but it would drop to 1.3 with Corr+2xTC. This is mainly because CORR and MMC are essentially uncorrelated in my submissions, whereas CORR and TC have a 40% correlation. Ideally, validation diagnostics for backtesting TC historically would be helpful, if that can be provided in the diagnostic tool.
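The effect of that correlation on Sharpe can be sketched with toy numbers. The per-round scores below are invented so that the second metric has the same mean in both cases; only its correlation with CORR differs. Sharpe here is simply mean over standard deviation of the combined per-round score, which is an assumption about how it is measured.

```python
import numpy as np


def payout_sharpe(corr, other, other_mult=2.0):
    """Mean / std of the per-round combined score corr + other_mult * other."""
    combined = np.asarray(corr) + other_mult * np.asarray(other)
    return combined.mean() / combined.std(ddof=1)


# Made-up per-round scores, for illustration only.
corr = [0.01, 0.03, 0.01, 0.03]
mmc = [0.00, 0.00, 0.02, 0.02]  # uncorrelated with corr
tc = [0.00, 0.02, 0.00, 0.02]   # perfectly correlated with corr

sharpe_mmc = payout_sharpe(corr, mmc)  # swings partly offset each other
sharpe_tc = payout_sharpe(corr, tc)    # swings stack up, so volatility is higher
```

Same mean payout in both cases, but the correlated pair has the lower Sharpe because good and bad rounds coincide.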

Will a train_example_preds.parquet file be provided at some point, in case we are interested in optimizing for Exposure Dissimilarity (mentioned in the original post from @mdo)? For those wanting to build a new train/validation set by mixing eras from each, it is currently impossible to draw on existing example_preds for the training eras.

Would a relatively decent equivalent be to use the example model provided to produce predictions for the training set? Or are the example_preds in the validation file specially chosen by the Numerai team for a particular reason?

lol well, I will stay skeptical initially and see what happens with TC rankings and daily/weekly scores. For example, I can imagine we will see some huge swings when people start experimenting. At the moment I have a model ranked #11 for TC, yet I have absolutely no clue why this one would be so high up in the ranking.

Time to get staking! but before you do, tell me about your model…

lol nothing fancy here, it’s an ensemble of different regression algos with the small feature selection, and also only 1/4 of the v2 dataset (1 out of every 4 eras, to avoid overfitting).
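For anyone wanting to try the same era subsampling, a minimal sketch with pandas follows. It assumes the data has an `era` column whose values sort chronologically (for example zero-padded strings); the function name and the toy frame are hypothetical.

```python
import pandas as pd


def every_nth_era(df, n=4, era_col="era"):
    """Keep only rows from every n-th era, starting with the first one."""
    eras = sorted(df[era_col].unique())
    keep = set(eras[::n])
    return df[df[era_col].isin(keep)]


# Toy frame: 8 eras with 2 rows each (made-up values).
toy = pd.DataFrame({
    "era": [f"{e:04d}" for e in range(1, 9) for _ in range(2)],
    "feature_a": range(16),
})

subset = every_nth_era(toy, n=4)  # keeps eras "0001" and "0005"
```

Changing the starting offset of the slice would give the other three non-overlapping subsets, which could be used to train separate ensemble members.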

you know, i tried something like that with ElasticNet, Ridge, Lasso and Lars. Nil Nada Niet.

I hope you’re gonna sell those predictions on numerbay, fame and fortune beckons… if you’re in the market for an agent…

Well… be my guest, I would say: https://numerbay.ai/product/numerai-predictions/bigcreeper_4

Maybe this has already been answered somewhere else and I missed it, but how is it that the documentation and diagnostic tool still mention MMC instead of TC? Is there a way to know the TC for models that have not been submitted?

@mdo wouldn’t removing the turnover constraint unfairly benefit ‘faster’ signals, that you actually wouldn’t be able to trade due to the high turnover? In Signals especially it would be easy to create fast high turnover models. If you can’t actually trade the signal though (due to high turnover, which isn’t constrained) then the signal wouldn’t be ‘contributing’ to the portfolio returns, right? Maybe I’m misunderstanding ‘turnover constraint’ in this context?

Doesn’t the turnover constraint just refer to limitations from stuff they are currently holding (i.e. they can’t turn over their whole portfolio every time they trade) and not some inherent “speed” of certain types of signals? (The removal of this constraint is what I was referring to in the other thread, btw, when I said TC isn’t computed against the actual Numerai portfolio.) Sure, in Signals (or Classic), somebody can switch up their model constantly to something else, but it has to be something else good (if they want to benefit from it). And consider that (at the moment anyway) there are two different funds with two different portfolios using two different optimizers, but only one metamodel.

The definition of a model “contributing” doesn’t need to be strictly limited to trades the model “recommended” (via its rankings) that actually happened. It can also mean creating, via the metamodel, a varied menu of good potential trades that the (real, full) optimizer can choose from, and that also fit in with the real-world turnover limitations from their current position. (And again, now you’ve got two optimizers doing this for two funds.) In other words, good choices on the menu (ones that time shows would have worked out well) should be rewarded whether or not they were actually chosen. TC is arbitrary enough already (from the user’s perspective, even if it isn’t really). Rewarding or punishing what are actually equivalent choices of trades (in terms of “surviving the optimizer” and in ultimate real-world performance) differently, based on timing and on what Numerai happens to be holding this week, introduces a truly arbitrary/random element (because it is all a black box to us) that would mess up the feedback mechanism, because “good” trades would be essentially randomly denied reward or even punished.

While this would probably yield a more stable metric, I suspect that it might be too closely related to CORR, which is what Numerai does not want. Instead they want predictions that are still correlated with the target even after some of the entries have been filtered out. Otherwise everyone will just optimize for the easiest signal to retrieve that works most of the time. But what happens if exactly this signal is filtered out after all constraints? Then you are left with something that is random noise or even systematically anti-correlated with the target.

For example, suppose the optimizer decides that all rows with feature_foo_bar not equal to 0.5 cannot be traded because the risk is too high. Let’s say this feature is, most of the time, the one most correlated with the target, so most users’ models will like this feature very much and depend heavily on it (similar to the risky features). But once this feature has been filtered on, the remaining predictions are probably trash.

I guess this is the main reason why FNC & exposure related metrics are good proxies for TC.
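For reference, a rough sketch of what "exposure" and "feature neutral" mean in this context: exposure as the correlation of predictions with each feature, and neutralization as removing the linear part of the predictions explained by the features. This is a generic least-squares version under my own assumptions, not Numerai's exact implementation; the function names and random data are made up.

```python
import numpy as np


def feature_exposure(preds, features):
    """Correlation of the predictions with each feature column."""
    return np.array([
        np.corrcoef(preds, features[:, j])[0, 1]
        for j in range(features.shape[1])
    ])


def neutralize(preds, features, proportion=1.0):
    """Subtract the least-squares fit of preds on the features (plus intercept)."""
    X = np.column_stack([features, np.ones(len(preds))])
    beta, *_ = np.linalg.lstsq(X, preds, rcond=None)
    return preds - proportion * (X @ beta)


rng = np.random.default_rng(0)
features = rng.random((500, 3))  # made-up feature matrix
preds = features @ np.array([0.5, 0.2, 0.0]) + 0.1 * rng.standard_normal(500)

before = feature_exposure(preds, features)             # large on the first feature
after = feature_exposure(neutralize(preds, features), features)  # approximately 0
```

A model that still correlates with the target after this step is not leaning on any single feature linearly, which is the property the filtering example above is about.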

But I agree that we should not be punished for the black box that comes after our submission. When I think about it, the list of proxy variables is probably the best set of metrics Numerai could offer to stake on, if TC is what Numerai wants. These variables basically say: if these are high, your ranking recommendations are likely to survive the constraint black box and still yield profit. This would also decouple user stakes from the noise of the market, which is something that we as users cannot do anything about and which should actually be the task of the risk optimizer.