I was also confused by the way neutralization was done. I could see that what we are getting at is simply running a linear regression of predictions on features then residualizing that out from predictions. That was the idea in my mind, but as you mentioned, the code itself uses a pseudo-inverse and not the normal inverse of variance of predictors formula.

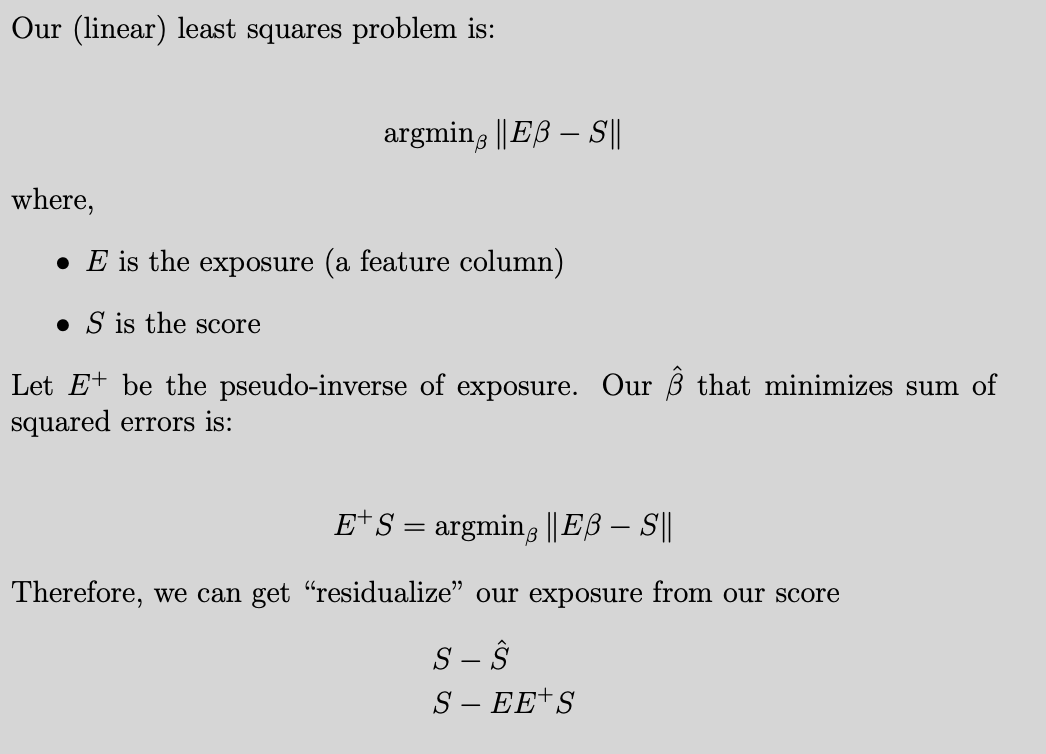

Well it turns out a pseudo-inverse is the solution to the least squares problem. I have a little explainer below. I assume an L-2 norm, of course if we change the norm then we change how we “neutralize”, which could be a interesting avenue: