In response to the OP’s question (Is TC slowing down your research and experimentation?), I would have to say no. It just changed the direction a bit. Well, more than a bit.

Plus I (finally) had to learn enough Python to do the dailies and interface it with MATLAB, my usual programming tool. BTW, thanks to the Numerai team (see below) for actually making that easy!!! Works like a charm, and it only took a couple of days once I set out to do it.

I find it a really interesting project, and I don’t think it’s one Numerai can really provide training targets for. But I do think that individuals can research it themselves in relation to their own analysis techniques.

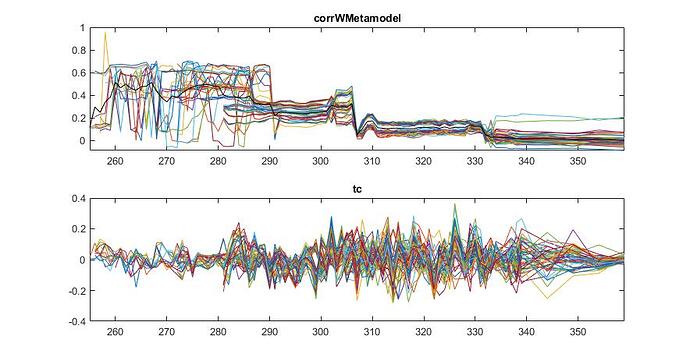

I think an “trick” is to do what one can to move as far away from linear solutions techniques in general when developing a model to solve for corr. Just as an example, here’s a plot of my results comparing the corrWMetaModel variable with TC:

The models from 255 to around 285 were quite linear. Around then I started introducing Gaussian Mixture approaches, and then, from 312 on, a series of different GMs based on variations of genetic algorithms. From the second plot, these different techniques certainly affect the variance in the TC; the next step is to look for consistency.
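For anyone who wants to run the same kind of comparison on their own models, here is a minimal sketch in Python. It groups models by number range and reports the TC mean, the TC variance, and the correlation between corrWMetaModel and TC within each group. The function name, the exact range boundaries (the post only gives approximate ones), and the random placeholder numbers are all assumptions for illustration; they are not the results plotted above.

```python
import numpy as np

def tc_stats_by_range(model_ids, corr_w_metamodel, tc, ranges):
    """Per model-number range: count, TC mean, TC sample variance, and the
    Pearson correlation between corrWMetaModel and TC."""
    model_ids = np.asarray(model_ids)
    cwm = np.asarray(corr_w_metamodel, dtype=float)
    tc = np.asarray(tc, dtype=float)
    out = {}
    for label, (lo, hi) in ranges.items():
        mask = (model_ids >= lo) & (model_ids <= hi)
        n = int(mask.sum())
        if n < 2:
            continue  # variance and correlation need at least two points
        out[label] = {
            "n": n,
            "tc_mean": float(tc[mask].mean()),
            "tc_var": float(tc[mask].var(ddof=1)),
            "corr_cwm_tc": float(np.corrcoef(cwm[mask], tc[mask])[0, 1]),
        }
    return out

# Hypothetical boundaries, loosely following the description in the post.
RANGES = {
    "linear": (255, 284),
    "gaussian_mixture": (285, 311),
    "ga_based_gm": (312, np.inf),
}

if __name__ == "__main__":
    # Placeholder data only: random numbers standing in for real model results.
    rng = np.random.default_rng(0)
    ids = np.arange(255, 340)
    cwm = rng.uniform(0.2, 0.9, size=ids.size)
    tc = rng.normal(0.0, 0.02, size=ids.size)
    for label, stats in tc_stats_by_range(ids, cwm, tc, RANGES).items():
        print(label, stats)
```

Replace the placeholder arrays with your own per-model corrWMetaModel and TC values. Comparing `tc_var` across the groups is one rough way to see whether a change of technique moved the variance, and tracking `tc_mean` over successive rounds would be a first pass at the consistency question.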

ETA: with reference to whom to thank for the Python tools, I think that my thanks actually should go to @uuazed and other contributors for making the package and doing the upkeep.