First off, I am more of a hobbyist when it comes to AI, so I could be wrong. However, it seems to me that training a neural net on a small sample and keeping the best weights is similar to deciding, back in school, to only use whichever method gave me a “winning streak” of X questions in a row on my tests. It would also seem that the smaller the sample size, the more short-term the model’s focus, and vice versa: the larger the sample size, the more long-term its focus. It’s just a theory on my part, but like I said, this is more of a hobby, and I haven’t done the formal study that others may have. Any and all enlightenment is welcome. Thanks.
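One way to make the “winning streak” intuition concrete is a small simulation. The sketch below assumes, hypothetically, 20 candidate models (or sets of weights) that all have exactly the same true per-era mean score, with normally distributed per-era noise; the mean of 0.01 and standard deviation of 0.03 are made-up illustration values, not figures from any real competition. Picking whichever candidate scored best over a few eras rewards luck, so the winner’s observed score overstates its true skill, and that overstatement shrinks as the number of evaluation eras grows.

```python
import random
import statistics


def best_observed_score(n_candidates, n_eras, true_mean, era_sd, rng):
    """Score n_candidates equally skilled models on n_eras noisy eras
    and return the best observed average score among them."""
    best = float("-inf")
    for _ in range(n_candidates):
        # Every candidate has the same true mean; only the era noise differs.
        scores = [rng.gauss(true_mean, era_sd) for _ in range(n_eras)]
        best = max(best, statistics.fmean(scores))
    return best


def average_inflation(n_eras, n_candidates=20, trials=200,
                      true_mean=0.01, era_sd=0.03, seed=0):
    """Average amount by which the selected 'best' model's observed score
    exceeds its true mean, over many repeated selections.
    All default parameter values are hypothetical illustration choices."""
    rng = random.Random(seed)
    picks = [
        best_observed_score(n_candidates, n_eras, true_mean, era_sd, rng)
        for _ in range(trials)
    ]
    return statistics.fmean(picks) - true_mean


if __name__ == "__main__":
    for n_eras in (4, 16, 64, 256):
        print(n_eras, round(average_inflation(n_eras), 4))
```

Running it for increasing `n_eras` should show the gap between the selected model’s score and its true skill shrinking roughly like 1/sqrt(n_eras), since the average of `n_eras` independent normal scores has standard deviation `era_sd / sqrt(n_eras)`.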

Yeah exactly. Your post actually reminded me of one of Andrew Ng’s videos in his Deep Learning specialization:

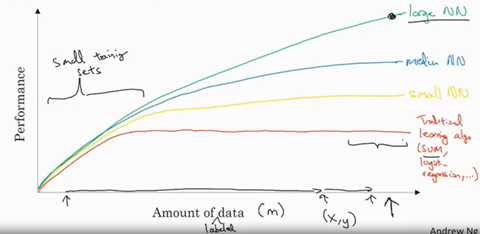

As a TL;DR, this graph basically sums up the whole video.

So, the more data you have, the bigger and more performant you can typically make your neural net. We’re definitely in the small-neural-net category in this competition, so make sure to make good use of the data you have.

Notice the point about traditional learning algorithms: they tend to work better with less data. It seems like people are having good success training on a small number of eras with traditional methods like XGBoost. For instance, look at BOR0, ranked number 2 at the time of writing.

Even more important than having a large training set is having a large validation set. If you judge your model’s performance over a small number of eras, you’re not going to get a good idea of how your model really performs in the long run. That’s why I personally use cross-validation for the vast majority of my models now.
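The post doesn’t say how the cross-validation folds are built. A common choice for era-structured data is to keep each era whole, so that no era is split between training and validation; every era then gets used for validation exactly once, which gives a much larger effective validation set than a single holdout. The sketch below is a hypothetical helper (`era_kfold` is not from any library) that assumes eras are given as sortable labels in chronological order, such as integers, and assigns contiguous blocks of eras to each fold. It does not add any gap between training and validation eras.

```python
def era_kfold(eras, n_folds=4):
    """Yield (train_idx, valid_idx) pairs of row indices such that each era
    falls entirely inside one validation fold (hypothetical helper)."""
    # Assumes era labels sort chronologically (e.g. ints, not "era10" < "era2").
    unique_eras = sorted(set(eras))
    if n_folds < 2 or n_folds > len(unique_eras):
        raise ValueError("n_folds must be between 2 and the number of eras")
    # Map each era to a fold, using contiguous blocks of eras per fold.
    fold_of = {era: i * n_folds // len(unique_eras)
               for i, era in enumerate(unique_eras)}
    for fold in range(n_folds):
        train_idx = [i for i, e in enumerate(eras) if fold_of[e] != fold]
        valid_idx = [i for i, e in enumerate(eras) if fold_of[e] == fold]
        yield train_idx, valid_idx


if __name__ == "__main__":
    row_eras = [1, 1, 2, 2, 3, 3, 4, 4]
    for train_idx, valid_idx in era_kfold(row_eras, n_folds=2):
        print("train:", train_idx, "valid:", valid_idx)
```

With eight rows spread over four eras and two folds, the first split validates on the rows from eras 1 and 2 and the second on eras 3 and 4. You would train one model per split and judge it by its scores across all the validation eras combined, rather than over one short holdout.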