There was feedback in the private messages which forced me to clarify my statements.

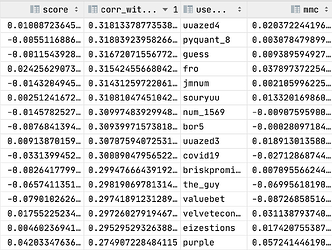

I believe that we cannot use any live data results as any justification of the theory discussed above. My point is that correlation with MetaModel is a completely wrong metric for model uniqueness. There are 4 example models in the attached code (based on the sdmlm’s code). First one is MM with -0.055 CORR performance . Second one is a true unique model with -0.036 CORR performance, 41% corr with MM, -0.066 MMC and -0.03 MMC-CORR value. The third one is a noisy “unique” model with -0.03 CORR performance, 43% corr with MM, -0.15 MMC and -0.12 MMC-CORR value. By the term noisy model I mean here that correlation was reduced mostly using part of predictions which does not correlate with target values. And the last, fourth model is highly correlated which is basically a noisy “unique” model with less artificial shuffles of predictions which does not correlate with target values. It has the same -0.03 CORR, 65% corr with MM, -0.075 MMC and -0.044 MMC-CORR value. So, truly unique model have the highest MMC and MMC-CORR metrics despite the worst raw CORR performance. Highest correlation model has better MMC and MMC-CORR metrics than noisy unique model. Both results provide me with the feeling that current MMC implementation is totally fine. And this “So rehoboam is rewarded much more solely for being more like the meta model, which makes no sense as usual, especially since purple has a much better model in this round. All of this data seems to follow this trend except for a couple of outliers that suggest some other kind of oddities in this data.” can be just explained by most of “unique” models in live data are fulfilled with a noise except “the couple of outliers” which are truly unique and useful models.

The only thing which makes me feel slightly less confident in my example is using 0.3 value in low_meta_corr_model array. So, any comments about that are appreciated.

Regards,

Mark

import numpy as np

import pandas

import scipy.stats

def spearmanr(target, pred):

return np.corrcoef(

target,

pred.rank(pct=True, method="first")

)[0, 1]

def neutralize_series(series, by, proportion=1.0):

scores = series.values.reshape(-1, 1)

exposures = by.values.reshape(-1, 1)

# this line makes series neutral to a constant column so that it's centered and for sure gets corr 0 with exposures

exposures = np.hstack(

(exposures, np.array([np.mean(series)] * len(exposures)).reshape(-1, 1)))

correction = proportion * (exposures.dot(

np.linalg.lstsq(exposures, scores)[0]))

corrected_scores = scores - correction

neutralized = pandas.Series(corrected_scores.ravel(), index=series.index)

return neutralized

def _normalize_unif(df):

X = (df.rank(method="first") - 0.5) / len(df)

return scipy.stats.uniform.ppf(X)

target = pandas.Series([0, 0, 0, 0,

0.25, 0.25, 0.25, 0.25,

0.5, 0.5, 0.5, 0.5,

0.75, 0.75, 0.75, 0.75,

1, 1, 1, 1])

meta_model = pandas.Series([1, 0, 0.25,

1, 0.5, 0.75,

0.5, 0.5, 1,

0.75, 0.75, 0,

0, 0.25, 0.25,

1, 0, 0.25, 0.5, 0.75])

high_meta_corr_model = pandas.Series([1, 0, 0.25,

1, 0.75, 0.5,

0.5, 0.5, 0.75,

0.75, 1, 0,

0, 0.25, 0.25,

0.75, 0.5, 1.0, 0.0, 0.25])

low_meta_corr_model = pandas.Series([0.3, 0.5, 0.75,

1, 0.5, 0.75,

0, 0, 1,

0.75, 0.75, 0,

0.25, 0.25, 0.5,

1, 1, 0, 0.25, 0.25])

low_meta_corr_model_noise = pandas.Series([0.25, 0, 1,

1, 0.75, 0.5,

0.5, 0.5, 0.75,

0.75, 1, 0,

0, 0.25, 0.25,

0.75, 0.5, 1.0, 0.0, 0.25])

# Meta model has raw corr with target of -5.5% (burning period)

meta_model_raw_perf = spearmanr(target, meta_model)

print(f"Meta_model performance: {meta_model_raw_perf}")

high_meta_corr_raw_perf = spearmanr(target, high_meta_corr_model)

high_meta_corr = spearmanr(meta_model, high_meta_corr_model)

print(f"High_meta_corr model cross-corr: {spearmanr(meta_model, high_meta_corr_model)}")

print(f"High_meta_corr model performance: {high_meta_corr_raw_perf}")

low_meta_corr_raw_perf = spearmanr(target, low_meta_corr_model)

low_meta_corr = spearmanr(meta_model, low_meta_corr_model)

print(f"Low_meta_corr model cross-corr: {spearmanr(meta_model, low_meta_corr_model)}")

print(f"Low_meta_corr model performance: {low_meta_corr_raw_perf}")

low_meta_corr_raw_perf_noise = spearmanr(target, low_meta_corr_model_noise)

low_meta_corr_noise = spearmanr(meta_model, low_meta_corr_model_noise)

print(f"Low_meta_corr_noise model cross-corr: {spearmanr(meta_model, low_meta_corr_model_noise)}")

print(f"Low_meta_corr_noise model performance: {low_meta_corr_raw_perf_noise}")

# MMC Computation

# All series are already uniform

# Neutralize (using forum post code for neutralization)

neutralized_high_corr = neutralize_series(high_meta_corr_model, meta_model, proportion=1.0)

neutralized_low_corr = neutralize_series(low_meta_corr_model, meta_model, proportion=1.0)

neutralized_low_corr_noise = neutralize_series(low_meta_corr_model_noise, meta_model, proportion=1.0)

# Compute MMC

mmc_high_corr = np.cov(target,

neutralized_high_corr)[0,1]/(0.29**2)

mmc_low_corr = np.cov(target,

neutralized_low_corr)[0,1]/(0.29**2)

mmc_low_corr_noise = np.cov(target,

neutralized_low_corr_noise)[0,1]/(0.29**2)

print(f"MMC for non-original model: {mmc_high_corr}")

print(f"MMC for original model: {mmc_low_corr}")

print(f"MMC for original noise model: {mmc_low_corr_noise}")

print(f"MMC-CORR for non-original model: {mmc_high_corr-high_meta_corr_raw_perf}")

print(f"MMC-CORR for original model: {mmc_low_corr-low_meta_corr_raw_perf}")

print(f"MMC-CORR for original noise model: {mmc_low_corr_noise-low_meta_corr_raw_perf_noise}")

Meta_model performance: -0.05518254055364692

High_meta_corr model cross-corr: 0.6560590932489134

High_meta_corr model performance: -0.030656966974248294

Low_meta_corr model cross-corr: 0.4169347508497767

Low_meta_corr model performance: -0.03678836036909795

Low_meta_corr_noise model cross-corr: 0.4353289310343258

Low_meta_corr_noise model performance: -0.030656966974248308

MMC for non-original model: -0.07529413605357009

MMC for original model: -0.06688466111771676

MMC for original noise model: -0.15449965579823513

MMC-CORR for non-original model: -0.044637169079321797

MMC-CORR for original model: -0.030096300748618812

MMC-CORR for original noise model: -0.12384268882398683

![]()