Leaderboard Bonus Exploit Uncovered

As many of you are aware, we plan to remove the leaderboard bonus going forward. The primary reason I’ve been pushing for this discontinuation is that it is susceptible to certain attacks by bad actors. We can’t afford to have the most profitable piece of the tournament be exploitable: as the profitability of a system increases, so does the effort people will put into gaming it. I have been suspicious of a couple of accounts for some time now, but only today was I able to prove it.

P, 1-P

There is an attack that some users are aware of called the p, 1-p attack. It is specifically designed to take advantage of asymmetries in the reward structure. The premise is this:

- Submit one high variance model.

- Make another model that is the exact opposite of the first model.

- Stake the exact same amount on both models.

- Due to the high variance, one will likely do very poorly, while the other does extremely well.

- However, payouts are now guaranteed to be exactly opposite for both models round to round.

- The model which does well will have a high position on the leaderboard and receive the leaderboard bonus.

- Since the round-to-round payouts will cancel out, there is exactly 0 risk from burns. There remains only potential profit from leaderboard bonus.
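The steps above can be sketched as a toy simulation. This is only an illustration under stated assumptions: the per-round payout is modeled as `stake * score`, scores are drawn from a zero-mean Gaussian, and the mirrored model scores exactly the negative of the first. None of these are taken from the actual tournament payout rules, and the function name is hypothetical.

```python
import random

def simulate_mirrored_pair(rounds=20, stake=40.0, seed=0):
    """Toy p, 1-p simulation; payout = stake * score is an assumption."""
    rng = random.Random(seed)
    net_payout = 0.0        # combined round-to-round payout of both models
    cumulative_score = 0.0  # running score of model A (model B is its negative)
    for _ in range(rounds):
        score = rng.gauss(0.0, 0.1)   # high-variance model A (assumed distribution)
        payout_a = stake * score      # A's payout (or burn, if negative)
        payout_b = stake * -score     # B mirrors A exactly
        net_payout += payout_a + payout_b
        cumulative_score += score
    # One of the two mirrored models necessarily finishes ahead of the other.
    leader = "A" if cumulative_score >= 0 else "B"
    return net_payout, leader

net, leader = simulate_mirrored_pair()
print(net, leader)
```

In this sketch the combined round-to-round payout nets to zero whatever the scores are, while one of the two models always ends up ahead. That leading model is the one positioned to collect a leaderboard bonus.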

The Exploit

Madmin, numer.ai/madmin, has been at the top of the staked leaderboard for some 2 months now.

Some of our analytics have shown the model itself is not particularly interesting, just some linear combination of a few features. This leads to a very high variance model that is able to take high risk and has the potential to blow every other user away in the proper regime. We might be okay with this as a standalone model, as the downside risk is high as well.

What I discovered though is another account called Madmax, numer.ai/madmax.

This model has been consistently outside of the top 300.

I immediately decided to check if it’s a p, 1-p attack as the names might imply. Though close to opposite, the models are not actually inverses of one another (their scores do not sum to 0). However, they both started staking exactly 40 NMR on exactly the same date. While this is suspicious, it’s not enough evidence to take action against an exploit at this point.

As I am working on an MMC payouts proposal though, I’m realizing that perhaps I should find some hard evidence to make my case for discontinuing the leaderboard bonus.

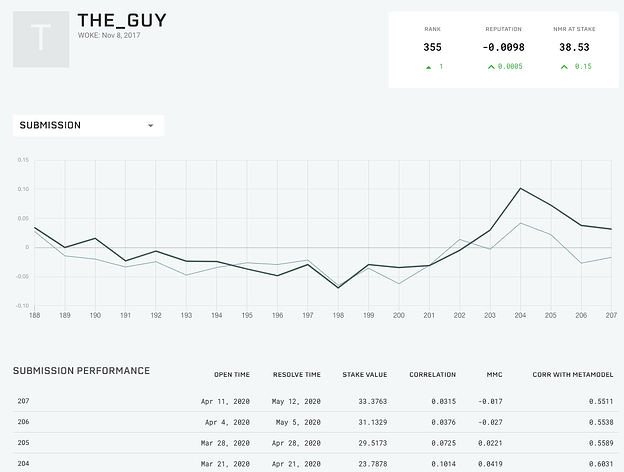

So through some investigation, I was able to uncover a third account. The_Guy, numer.ai/the_guy.

This user sits outside of the top 300 as well. They started staking exactly 40 NMR on exactly the same date as Madmax and Madmin. The account was created exactly 1 day before Madmax. And for any given round, if you sum the scores of Madmax, Madmin, and The_Guy, the result is always 0.

So, as with the p, 1-p attack, this set of models achieves 0 risk, but it has an even higher chance of one of them achieving high reputation, because they are submitting 3 equally spaced high-variance models at different ends of the spectrum rather than 2.
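The sum-to-zero check described above is easy to express in code. The sketch below is a minimal illustration: the function name, the account labels, and the score values are made up for the example and are not the real accounts' scores.

```python
import math

def scores_cancel(scores_by_account, tol=1e-9):
    """Return True if, in every round, the accounts' scores sum to ~0."""
    per_round = zip(*scores_by_account.values())  # one tuple of scores per round
    return all(math.isclose(sum(r), 0.0, abs_tol=tol) for r in per_round)

# Made-up scores for three hypothetical accounts over two rounds.
example = {
    "model_a": [0.03, -0.02],
    "model_b": [-0.01, 0.05],
    "model_c": [-0.02, -0.03],
}
print(scores_cancel(example))
```

A tolerance is used because floating-point scores rarely sum to exactly zero. The same check applied to only two of the three accounts would fail, which matches the observation that Madmax and Madmin alone are not inverses of one another.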

These models began with 40 NMR each in October 2019.

To date, the models have a combined 222 NMR.

17 for Madmax, 38 for The_Guy, and 167 for Madmin.

This is a clear exploitation of the payout system for over 100 NMR and 85% returns in less than 6 months.
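For reference, the arithmetic behind those figures, using only the numbers quoted above:

```python
initial_stake_each = 40  # NMR staked on each model in October 2019
final_balances = {"Madmax": 17, "The_Guy": 38, "Madmin": 167}  # NMR to date

initial_total = initial_stake_each * len(final_balances)  # 120 NMR
final_total = sum(final_balances.values())                # 222 NMR
profit = final_total - initial_total                      # 102 NMR
return_pct = 100 * profit / initial_total                 # 85.0 percent

print(initial_total, final_total, profit, return_pct)
```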

The Punishment

From docs.numer.ai:

Let me first say that, as a “crypto company”, we are sympathetic towards the idea that code is law, and that if someone exploits it, it is the code writer’s fault. It is a goal of the company to decentralize to the point where we can live by this.

On the other hand, as the docs say for now, “We reserve the right to refund your stake and void all earnings and burns if we believe that you are actively abusing or exploiting the payout rules.” We feel like protecting the integrity of the tournament for legitimate users is quite obviously more critical than adhering to this crypto idealism at present.

In this case, we believe the following actions are just:

- Revert all payouts. This means they will receive all of their originally staked 120 NMR, but all payouts and burns will be undone.

- Ban all three accounts from the tournament going forward.

We will be discontinuing the leaderboard bonus in 100 days, as planned, removing this asymmetry and disabling the attack vector. There is little risk of exploitation in the coming 100 days, as new stakes will not take effect by then.

And of course, we will be monitoring the leaderboard and banning without remorse any users suspected of such an attack.

Update:

We have reconsidered the punishment terms since the writing of this post. Now the punishment is this:

- Ineligible for the leaderboard and leaderboard bonus for its remaining 100 days.

- No ban going forward

- Keeps all NMR earned up to this point.

There are a few key points contributing to this.

- No rebalancing. If the user was truly trying to achieve zero risk, they would need to rebalance their NMR across the models every few weeks. The fact that no rebalancing occurred points to it being a viable strategy and genuine “skin in the game” on Madmin, with belief that it was going to continue to be the best model going forward.

- We don’t want users to have to think about potential punishments. They should be able to play the game given the incentives we’ve given them. That’s why leaderboard bonus is going away. We see it as our responsibility to make not-exploitable payout systems. We think this type of modeling could be viable even without leaderboard bonus, and we don’t want to inhibit this type of creative thinking and hedging as we go forward.

- Already received payments should be final. We don’t want to set a precedent where we take away NMR that’s already been earned and in the user’s account.
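The rebalancing point in the list above can be illustrated with a small sketch. It assumes, purely for illustration, that each round's payout or burn is added to that model's stake and that payout is `stake * score`; the function name is hypothetical. Under those assumptions, two mirrored models stop cancelling as soon as their stakes drift apart.

```python
def net_payouts_without_rebalancing(scores, stake=40.0):
    """Per-round combined payout of a mirrored pair whose stakes compound."""
    stake_a = stake_b = stake
    nets = []
    for s in scores:
        payout_a = stake_a * s    # model A scores s
        payout_b = stake_b * -s   # mirrored model B scores -s
        nets.append(payout_a + payout_b)
        stake_a += payout_a       # assumed: payouts and burns change the stake
        stake_b += payout_b
    return nets

print(net_payouts_without_rebalancing([0.1, 0.1, 0.1]))
```

Only the first round nets to zero here. After that, the better-performing model carries the larger stake, so the pair is exposed to its future performance unless the stakes are periodically reset to equal amounts.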

Ultimately, it is our responsibility to make a tournament in which users are incentivized to work towards goals in the intended way. Users should not have the burden of thinking about what is legal and what is not, or of having to think about how the Numerai team will view their behavior.

We still reserve the right to return payouts, as described in this original post, but we will only do it in the most extreme, unquestionable circumstances. This case does not meet that criterion.