I have made my own feature set that is a subset of the v4.3 feature set. Here is the link:

The goal of this experiment was to create a feature set with as few features as possible while still maximizing CORR and MMC. I wanted a feature set that is smaller than the Numerai medium set contained in features.json but also performs better. The most practical application of this feature set is that it lets users train models with less RAM, since memory limits are a common roadblock for new users.

The methods I used to select the features were pretty simple:

- Built-in feature importances of lightgbm/xgboost.

- SHAP feature importances.

- Brute-force evaluation vs. the validation set.
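To make the idea concrete, here is a minimal sketch of how an importance ranking and a brute-force validation check can be combined. It is not the exact procedure used for this feature set. The names `best_top_k`, `importances`, `score_fn`, and `candidate_sizes` are hypothetical: `importances` stands for any per-feature score (for example from LightGBM or SHAP), and `score_fn` stands for whatever trains a model on a subset and returns its validation CORR.

```python
from typing import Callable, Dict, Iterable, List, Tuple


def best_top_k(
    importances: Dict[str, float],
    score_fn: Callable[[List[str]], float],
    candidate_sizes: Iterable[int],
) -> Tuple[List[str], float]:
    """Rank features by importance, then keep the top-k prefix that scores best."""
    # Most important feature first.
    ranked = sorted(importances, key=importances.get, reverse=True)
    best_subset: List[str] = []
    best_score = float("-inf")
    for k in candidate_sizes:
        subset = ranked[:k]
        score = score_fn(subset)  # hypothetical: train on subset, score on validation
        if score > best_score:
            best_subset, best_score = subset, score
    return best_subset, best_score


# Toy example: only "a" and "c" carry signal, and every feature has a small cost.
toy_importances = {"a": 0.5, "b": 0.1, "c": 0.3, "d": 0.05}


def toy_score(subset: List[str]) -> float:
    return len({"a", "c"} & set(subset)) - 0.01 * len(subset)


chosen, chosen_score = best_top_k(toy_importances, toy_score, [1, 2, 3, 4])
print(chosen, chosen_score)
```

Every call to `score_fn` looks at the validation set, so the more subsets you try, the more the final choice is tuned to validation.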

(I will say up front: yes, this feature set is “overfit” to the validation set. I did not train on validation, but I did evaluate against validation many times. Does this feature set still have value? That is for you to decide.)

Overall, I am pleased with the results. Here are the cumulative CORR20v2 results using the “example model” trained on eras 1-561 (downsampled to every 4th era) on target_cyrus_v4_20:

model = LGBMRegressor(n_estimators=2000, max_depth=5, learning_rate=0.01, colsample_bytree=0.1, num_leaves=2**5+1)

(I just noticed that num_leaves should be 2**5-1 rather than 2**5+1 to match the example model, but the difference is minor.)
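For anyone who wants to reproduce the training subset, the "every 4th era" downsampling can be sketched like this. The helper name `every_nth_era` is hypothetical, and the sketch assumes era labels are zero-padded four-digit strings and that the downsampling starts at era 1.

```python
from typing import List


def every_nth_era(first: int, last: int, step: int = 4) -> List[str]:
    """Return zero-padded era labels first, first+step, ... up to and including last."""
    return [f"{era:04d}" for era in range(first, last + 1, step)]


train_eras = every_nth_era(1, 561, step=4)
print(len(train_eras), train_eras[:3], train_eras[-1])

# With a pandas DataFrame `train` that has an "era" column (an assumption),
# the training rows could then be selected with:
#   train = train[train["era"].isin(train_eras)]
```

Keeping only every 4th era cuts the training rows to roughly a quarter, which also helps with memory.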

Evaluated vs ALL validation eras 575-1092:

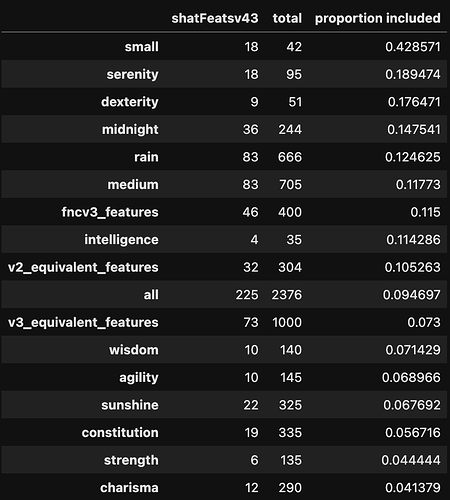
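As a rough illustration of how a cumulative per-era curve like this is built, here is a simplified sketch. It uses a plain rank correlation per era as a stand-in and does not apply the extra transforms that Numerai's CORR20v2 uses, so the numbers will not match official scores. The function names and the `(era, prediction, target)` row layout are assumptions for the example.

```python
from collections import defaultdict
from itertools import accumulate
from math import sqrt
from typing import Iterable, List, Sequence, Tuple


def ranks(values: Sequence[float]) -> List[float]:
    """Ordinal ranks (0 = smallest); ties are broken by position."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    for rank, idx in enumerate(order):
        out[idx] = float(rank)
    return out


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; assumes both inputs have non-zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def cumulative_era_corr(
    rows: Iterable[Tuple[str, float, float]],
) -> Tuple[List[str], List[float], List[float]]:
    """Group (era, prediction, target) rows by era, score each era, then accumulate."""
    by_era = defaultdict(lambda: ([], []))
    for era, pred, target in rows:
        by_era[era][0].append(pred)
        by_era[era][1].append(target)
    eras = sorted(by_era)
    per_era = [pearson(ranks(by_era[e][0]), by_era[e][1]) for e in eras]
    return eras, per_era, list(accumulate(per_era))


# Toy data: perfect ordering in one era, fully reversed in the next.
toy_rows = [
    ("0575", 0.1, 0.0), ("0575", 0.2, 0.5), ("0575", 0.3, 1.0),
    ("0576", 0.3, 0.0), ("0576", 0.2, 0.5), ("0576", 0.1, 1.0),
]
eras, per_era, cumulative = cumulative_era_corr(toy_rows)
print(eras, per_era, cumulative)
```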

Surprisingly, my compact feature set of 225 features gets higher CORR than the full v4.3 feature set! In fact, all the metrics are better, including Sharpe and even feature exposure (barely).
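For readers new to these diagnostics, Sharpe here is the mean of the per-era scores divided by their standard deviation. A minimal sketch, assuming the population standard deviation is used (the official diagnostics may differ) and with a hypothetical helper name:

```python
from statistics import mean, pstdev
from typing import Sequence


def era_sharpe(per_era_scores: Sequence[float]) -> float:
    """Mean per-era score divided by its population standard deviation."""
    return mean(per_era_scores) / pstdev(per_era_scores)


# Made-up per-era scores, for illustration only.
toy_scores = [0.02, 0.04, 0.00, 0.02]
toy_sharpe = era_sharpe(toy_scores)
print(round(toy_sharpe, 4))
```

A higher value means the per-era scores are more consistent relative to their average.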

Here are the diagnostics of each feature set:

My feature set (225)

All v4.3 features (2,376)

Medium v4.3 features (705)

Small v4.3 features (42)

Will these features continue to beat the full feature set? Who knows, only time will tell. If you forced me to choose one to stake on, I would probably still choose the full feature set, but it will be interesting to see how they perform going forward. Good luck!