Hi, Numerai Community!

I'm sharing my model approach and some insights from it, because Signals is moving into a new phase.

My best-performing model is habakan_x, which reached the top of the leaderboard by 1-year return (strictly, the top 1-year-return model is habakan, but that model has submitted habakan_x's predictions almost every round).

However, judging from its MMC and IC, I don't think this model will do well on TC.

So I think sharing it costs me nothing and is good for the community.

This content was prepared after a discussion with katsu-san. (Thanks @katsu1110 for your advice and discussion!)

Summary

- habakan_x adds only the following pipeline steps to katsu's Kaggle starter notebook (whose model is katsu1110_edelgard):
  - statistical denoising of the data
  - binning
- Comparing the live performance of habakan_x and katsu1110_edelgard
  - The two models' IC is highly correlated, but their Corr is less correlated
  - Neutralization appears to be affected by denoising and binning
- Analysis of Corr and IC for each model
  - habakan_x shows a weaker correlation between Corr and IC than katsu1110_edelgard
- habakan_x has a fairly low TC, while katsu1110_edelgard's TC is high


About my model: habakan_x

There's no need to explain my model in detail, because habakan_x is based on the Signals starter notebook published by katsu (whose model is katsu1110_edelgard).

habakan_x only adds the following pipeline steps to katsu1110_edelgard:

- Denoising the data using basic statistical outlier detection

- Binning (9 bins; there is no particular reason for that number)
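The two extra steps above can be sketched roughly as follows. This is only an illustration under assumptions: the post does not say which outlier test was used or whether outliers are clipped or dropped, so clipping to Tukey's fences (1.5 × IQR, a hypothetical choice) stands in for "basic statistical outlier detection", and the 9 bins are assumed to be equal-width. Both functions are meant to be applied to one date's cross-section of a single feature; the function names are hypothetical.

```python
import statistics
from typing import List


def clip_outliers_iqr(values: List[float], k: float = 1.5) -> List[float]:
    """Clip values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR].

    Assumed denoising step; the original pipeline's exact method is unknown.
    """
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartiles (Python 3.8+)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def equal_width_bin(values: List[float], n_bins: int = 9) -> List[int]:
    """Map each value to a bin label 0..n_bins-1 over [min, max] in equal-width steps."""
    low, high = min(values), max(values)
    if high == low:
        return [0] * len(values)
    width = (high - low) / n_bins
    # The maximum value would land at index n_bins, so cap it at the last bin.
    return [min(int((v - low) / width), n_bins - 1) for v in values]


if __name__ == "__main__":
    # Hypothetical cross-section of one feature on one date, with one extreme value.
    feature = [float(i) for i in range(19)] + [1000.0]
    print(equal_width_bin(feature))                     # without denoising
    print(equal_width_bin(clip_outliers_iqr(feature)))  # with denoising
```

Equal-width bins are used here because, with rank-based (quantile) bins, clipping would not change any bin assignment; with equal-width bins a single extreme value stretches the range and pushes most values into the lowest bin unless it is clipped first.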

This approach seems reasonable for the Signals task, which involves handling noisy data.

In fact, this model performed better than my models that added their own unique features.

Because I wanted to compare it against katsu1110_edelgard, the features, classifier, and hyperparameters are kept the same.

Comparing habakan_x and katsu1110_edelgard

Comparing the two models (same features and classifier) gives some interesting insights.

The data for this analysis comes from the models' live performance.

The summary statistics for each metric are as follows.

| Model | Corr Mean | Corr Sharpe | MMC Mean | IC Mean |
|---|---|---|---|---|
| habakan_x | 0.0247 | 1.1160 | 0.0177 | -0.0090 |
| katsu1110_edelgard | 0.0152 | 0.5704 | 0.0105 | -0.0178 |
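For reference, the Mean and Sharpe columns can be reproduced from per-round scores roughly as below. This sketch assumes that Sharpe means the mean of the per-round scores divided by their sample standard deviation (no annualization); the function name and the example scores are hypothetical, not real data from either model.

```python
import statistics
from typing import Dict, List


def summarize_scores(round_scores: List[float]) -> Dict[str, float]:
    """Return the mean and Sharpe (mean / sample std) of per-round scores."""
    mean = statistics.fmean(round_scores)
    std = statistics.stdev(round_scores)  # needs at least two rounds
    return {"mean": mean, "sharpe": mean / std}


if __name__ == "__main__":
    # Hypothetical per-round Corr values, not the real scores of either model.
    corr_by_round = [0.03, -0.01, 0.05, 0.02, 0.04]
    print(summarize_scores(corr_by_round))
```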

These metrics show that habakan_x's Corr is better than katsu1110_edelgard's, but the more interesting thing to focus on is IC.

Analysis of IC

Figure 1 shows the IC of both models over time (Rounds 289 to 307).

Figure 1: IC over time for habakan_x (top) and katsu1110_edelgard (bottom)

Qualitatively, the IC trajectories are very similar.

Figure 2 plots each metric (Corr and IC) of one model against the other, together with a correlation analysis.

Figure 2: habakan_x vs. katsu1110_edelgard, Corr (left) and IC (right)

The R² for IC is over 0.89, but the R² for Corr is only 0.69.

The IC result makes sense, because the mean correlation between the two models' validation predictions is 0.89.
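The R² values in this post compare two per-round series: here the IC of habakan_x against that of katsu1110_edelgard over the same rounds, and later Corr against IC within one model. A minimal stdlib sketch, assuming R² comes from an ordinary least-squares fit with an intercept, in which case it equals the squared Pearson correlation; the function names and example values are hypothetical.

```python
import statistics
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length series."""
    mean_x, mean_y = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def r_squared(x: Sequence[float], y: Sequence[float]) -> float:
    """R^2 of the OLS fit y ~ a + b*x, which equals pearson(x, y) ** 2."""
    return pearson(x, y) ** 2


if __name__ == "__main__":
    # Hypothetical per-round IC values for two models over the same rounds.
    ic_model_a = [0.010, -0.020, 0.030, 0.000, -0.010]
    ic_model_b = [0.008, -0.015, 0.025, 0.004, -0.012]
    print(round(r_squared(ic_model_a, ic_model_b), 3))
```

Since R² is the squared correlation, an R² of 0.89 corresponds to a Pearson correlation of about 0.94.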

Given these results, the neutralization of signals may be affected even by processing as simple as denoising or binning.
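To illustrate why denoising or binning could change what survives neutralization, here is a one-factor linear neutralization sketch. It is not Numerai's actual procedure, whose factors are not described in this post: `factor` is a hypothetical stand-in for a single risk factor and `proportion` is an assumed knob. Any preprocessing that changes how the predictions co-vary with the factor changes the fitted exposure `beta`, and therefore the residual that is left afterwards.

```python
import statistics
from typing import List


def neutralize(preds: List[float], factor: List[float], proportion: float = 1.0) -> List[float]:
    """Subtract `proportion` of the linear exposure of `preds` to a single factor.

    Fits preds ~ a + beta * factor by ordinary least squares, then removes
    proportion * beta * (factor - mean(factor)). With proportion=1.0 the
    result has zero sample covariance with `factor`.
    """
    mean_f = statistics.fmean(factor)
    mean_p = statistics.fmean(preds)
    cov = sum((f - mean_f) * (p - mean_p) for f, p in zip(factor, preds))
    var = sum((f - mean_f) ** 2 for f in factor)
    if var == 0:
        # A constant factor carries no exposure to remove.
        return list(preds)
    beta = cov / var
    return [p - proportion * beta * (f - mean_f) for p, f in zip(preds, factor)]


if __name__ == "__main__":
    factor = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical risk-factor values
    raw = [3.0, 1.0, 4.0, 1.0, 5.0]     # hypothetical raw predictions
    print(neutralize(raw, factor))
```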

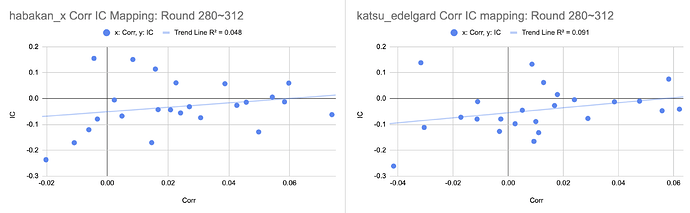

Relationship of IC and Corr for each model

The experiment above analyzed the relationship between the two models.

The next experiment analyzes Corr against IC within the same model.

Figure 3: Corr vs. IC for habakan_x (left) and katsu1110_edelgard (right)

The R² between IC and Corr is 0.323 for katsu1110_edelgard, but only 0.065 for habakan_x.

It is difficult to draw a conclusion from this result alone, because these R² values depend on latent variables, such as how much alpha each signal contains and the neutralization factors.

Still, it is very strange that denoising or binning alone produces such a difference.

Analysis of TC

Looking at TC, the two models' TC ranks differ greatly:

habakan_x: 2713

katsu1110_edelgard: 234

Figure 4: TC over time for habakan_x (top) and katsu1110_edelgard (bottom)

The cumulative TC of the two models seems to trend in opposite directions.

I haven't analyzed this deeply, but TC also seems to change with denoising or binning.

Honestly, I'm not too bothered by this result, because I always thought of this model as “not unique, but good performance”.

If anything, I'm glad the result turned out to be informative 😂

I think it is good that TC is based on Numerai's actual performance.

But it is also important to give feedback on the results of Signals modeling.

Do the analysis and results above make sense to Numerai competitors and supporters?

I hope this thread is a chance for the community to share ideas about Signals.

Thank you!