Hello everyone,

Recently I played around with the new v4 dataset and tried to build a new ranking model.

During experimentation, I noticed that ranking the rows within an era is relatively “easy”: if the model is trained on, let's say, half of the data from eraXYZ and then tested on the other half of the same era, which it has not seen during training, it can reach (obviously?) really high rank correlations, sometimes more than 0.3.

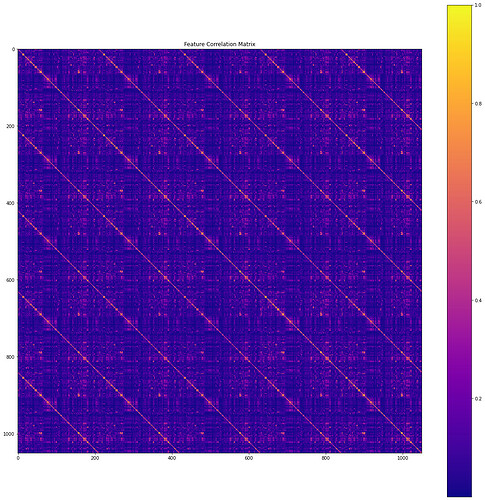
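
A minimal sketch of this within-era experiment, run on synthetic data rather than the v4 dataset. The linear model, the `spearman` helper, the noise level and the synthetic era are all illustrative assumptions, not the actual setup used for the plot above.

```python
import numpy as np

def spearman(a, b):
    """Spearman rank correlation (assumes no ties, fine for continuous values)."""
    rank_a = np.argsort(np.argsort(a))
    rank_b = np.argsort(np.argsort(b))
    return float(np.corrcoef(rank_a, rank_b)[0, 1])

def within_era_score(X, y, rng):
    """Fit on a random half of one era, score the ranking on the other half."""
    idx = rng.permutation(len(y))
    half = len(y) // 2
    train, test = idx[:half], idx[half:]
    # Ordinary least squares stands in for the real ranking model (assumption).
    w, *_ = np.linalg.lstsq(X[train], y[train], rcond=None)
    return spearman(X[test] @ w, y[test])

rng = np.random.default_rng(0)
# Synthetic "era": 2000 rows, 20 features, one hidden linear rule plus heavy noise.
X = rng.normal(size=(2000, 20))
y = X @ np.ones(20) + rng.normal(scale=10.0, size=2000)

score = within_era_score(X, y, rng)
print(f"held-out rank correlation within the era: {score:.3f}")
```

Because both halves share the same hidden rule, the held-out score stays clearly positive even with a lot of noise, which is the "easy" case described above.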

I also noticed that a model trained on one specific era performs really well on some other eras, while on others it performs even worse than guessing, as if the sorting mechanics were completely reversed. This is not really a surprising result, but I wanted to get a better feel for it, so I made a correlation matrix of the era dependencies to see what is going on.

What you can see here is roughly this: when the model has a positive correlation on eraX, the color shows the correlation it has on eraY, where blue means correlation > 0 and red means correlation < 0:

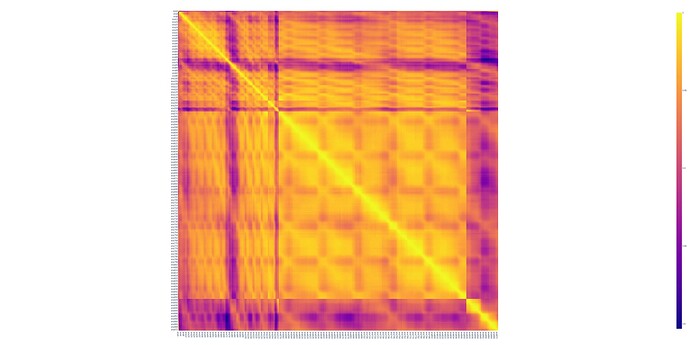
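
One plausible way to build such a matrix, sketched on synthetic data: fit one model per era and score every model on every era. The two-regime setup (even eras follow a rule, odd eras follow the reversed rule), the linear model and all names here are assumptions for illustration only.

```python
import numpy as np

def spearman(a, b):
    """Spearman rank correlation (assumes no ties)."""
    rank_a = np.argsort(np.argsort(a))
    rank_b = np.argsort(np.argsort(b))
    return float(np.corrcoef(rank_a, rank_b)[0, 1])

def make_era(rng, w, n=500, noise=5.0):
    """Synthetic era whose target follows the linear rule w plus noise."""
    X = rng.normal(size=(n, len(w)))
    y = X @ w + rng.normal(scale=noise, size=n)
    return X, y

rng = np.random.default_rng(0)
base_w = np.ones(10)
# Hypothetical regimes: even eras use base_w, odd eras use the reversed rule.
eras = [make_era(rng, base_w if k % 2 == 0 else -base_w) for k in range(6)]

# One least-squares model per era (stand-in for the real ranker).
models = [np.linalg.lstsq(X, y, rcond=None)[0] for X, y in eras]

# scores[e, m] = rank correlation of the model trained on era m, scored on era e.
# The diagonal is in-sample and therefore optimistic.
scores = np.array([[spearman(X @ w, y) for w in models] for X, y in eras])
print(np.round(scores, 2))
```

In this toy setup the matrix shows a checkerboard: models transfer well to eras of the same regime and score below zero on eras of the reversed regime.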

Things get more interesting if the columns are sorted against a specific era, like here, where I sorted them by the 99th era used:

If you look closely, apart from the row that is perfectly sorted, there are a few other rows that show clear “ranking mechanic similarity”, while others seem to be completely reversed.
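
The column sorting itself can be sketched with `np.argsort`. The small `scores` matrix below is made up for illustration (rows are eras, columns are models), and `ref_era` is a hypothetical name for the era used as the sort key.

```python
import numpy as np

# Made-up score matrix: scores[e, m] = correlation of model m on era e.
scores = np.array([
    [ 0.30, -0.10,  0.20, -0.25],   # era 0 (reference)
    [ 0.25, -0.05,  0.15, -0.20],   # era 1: similar ordering to era 0
    [-0.28,  0.12, -0.18,  0.22],   # era 2: roughly reversed ordering
])
model_labels = np.array(["m0", "m1", "m2", "m3"])

ref_era = 0
# Column order that sorts the reference row in ascending order.
order = np.argsort(scores[ref_era])

sorted_scores = scores[:, order]
sorted_labels = model_labels[order]

print(sorted_labels)
print(sorted_scores)
```

After sorting, the reference row increases from left to right. Rows with a similar gradient belong to eras that rank the models the same way, and rows with the opposite gradient belong to eras where the ranking is reversed.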

What I did next was sort the rows by the strength of this correlation dependency (a correlation of correlations) to see how many other eras are sorted in the same way, as these eras seem to be closely related. This is the one with the most similar eras:

As another example, here is the matrix sorted against the era with the least similarity to the others:

You can think of the latter era as being more “unique” than the first one.

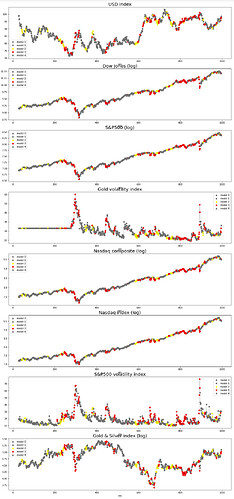
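
A rough sketch of the "correlation of correlation" idea: correlate the rows of a score matrix with each other and rank eras by how strongly they relate to the rest. The synthetic `scores` matrix, the choice of the mean absolute correlation as the "strength", and all names are assumptions; the plots above may have been produced differently.

```python
import numpy as np

rng = np.random.default_rng(1)
n_eras, n_models = 8, 40

# Hypothetical data: eras 0-5 share one model profile (some with flipped sign),
# eras 6-7 are "unique" and contain only noise.
profile = rng.normal(size=n_models)
signs = np.array([1, 1, -1, 1, -1, 1])
shared = 0.1 * signs[:, None] * profile[None, :]
scores = np.vstack([shared, np.zeros((2, n_models))])
scores = scores + rng.normal(scale=0.02, size=(n_eras, n_models))

# era_sim[a, b] = correlation between the score rows of era a and era b.
era_sim = np.corrcoef(scores)
np.fill_diagonal(era_sim, np.nan)   # ignore each era's correlation with itself

# Strength = mean absolute similarity to the other eras (reversed eras count too).
strength = np.nanmean(np.abs(era_sim), axis=1)
ranking = np.argsort(-strength)     # most connected era first, most unique last

print("eras from most similar to most unique:", ranking)
```

Taking the signed mean instead of the absolute value would count only eras sorted in the same direction, which may be closer to what "sorted in the same way" means here.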

I tried feeding the output of a convolutional neural net that convolves over the entire data set of one era into the ranker model, so that it can somehow “identify” which sorting scheme to use. In the end, though, my model really struggles to learn the appropriate sorting scheme.

This also fits the observation that a lot of models perform really well for a certain period of time, only to sink down in the tournament and never be seen again. My guess is that most models are more or less “lucky” to perform well on those eras in the training set that happen to be similar to the actual live era.

What do you think? Do you have suggestions for how to incorporate era similarity into an actual model?