I am thinking about automating submissions in the cloud.

I’m considering moving my submission code to Azure Functions (see “Azure Functions Overview” on Microsoft Learn). The cost is basically $0; I just haven’t gotten around to it yet.

Does it have enough computational resources?

A similar service, GCP Cloud Functions, has a memory limitation that makes it unsuitable for training, I think.

Not for training, not even close. Azure Functions are limited to ~1.5GB of memory, which should be enough for most models to predict a single era. I think Google Cloud and AWS offer equivalents, but I don’t know what their limitations are.

Don’t you update your trained models with the updated dataset?

I have a lot of older models that I’m afraid to retrain.

I have published an article describing “How I automated my Numerai weekly submission pipeline for free, using Azure functions and python”.

I think you will find it useful!

Thank you very much for the information! I will absolutely check it out.

I use Deepnote. I’m able to submit daily predictions using their notebook scheduler feature.

That’s a thing?

But now that submissions have changed to being email-triggered, how will the schedule work?

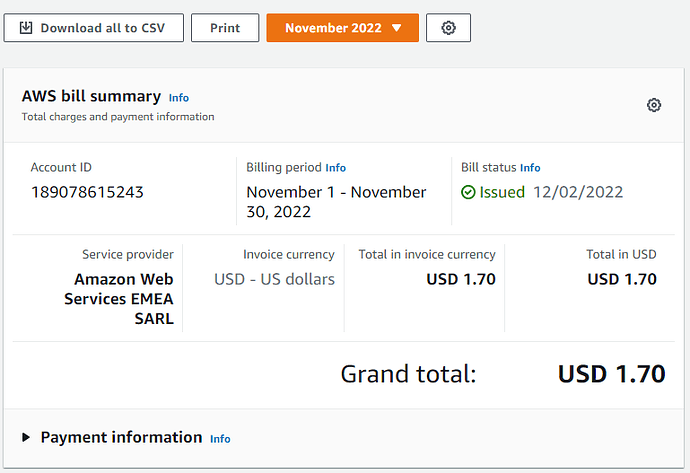

My submission automation costs around 0.6 €/month on Azure. It runs on a container instance that loads the models from cloud storage, predicts, and stores the results on the same cloud storage until the next round. It runs very fast since it only predicts on live data, so no training is performed. It takes the container about 90 seconds to run my 12 models.
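For anyone wanting to try the same predict-only pattern, here is a minimal sketch of the load, predict, store loop. It is not the poster's actual code: the function name `run_prediction_job`, the `*.pkl` layout, and the use of a local directory as a stand-in for cloud storage are all assumptions made for illustration.

```python
import pickle
from pathlib import Path


def _load_pickle(path):
    """Default loader: unpickle a pre-trained model (only use trusted files)."""
    with Path(path).open("rb") as fh:
        return pickle.load(fh)


def run_prediction_job(model_dir, live_rows, output_dir, load_model=_load_pickle):
    """Load every model in model_dir, predict on live_rows, write one CSV each.

    model_dir and output_dir are local folders standing in for cloud storage
    (an assumption). Each model only needs a .predict(rows) method.
    No training happens here, which is what keeps the job short.
    """
    model_dir, output_dir = Path(model_dir), Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for model_path in sorted(model_dir.glob("*.pkl")):
        model = load_model(model_path)
        predictions = model.predict(live_rows)
        out_path = output_dir / f"{model_path.stem}_predictions.csv"
        with out_path.open("w") as out:
            out.write("prediction\n")
            for value in predictions:
                out.write(f"{value}\n")
        written.append(out_path)
    return written
```

In a real deployment the two directories would presumably be replaced by reads and writes against the storage account, and the CSVs would be uploaded when the submission trigger fires.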

My whole cloud infrastructure consists of the container instance, the cloud storage, one HTTP trigger for the submissions, one HTTP trigger for NumerBay sales, and two scheduled triggers: one for daily rounds and one for weekend rounds.

The only small detail is that my container image is hosted on a free private repository outside Azure, so that cost is not accounted for. Actually, that image repository would be the most expensive resource, costing about 4.2 € per month, although I would not be surprised if there are less expensive alternatives out there.

Edit: I forgot to mention that this represents the cost of daily and weekly predictions. Since this thread is relatively old, maybe I should point that out. I am bad at predictions, but I have been smoothly predicting daily since day 1 of daily predictions.

I am using Kaggle for training and for submissions, because they provide free notebooks with 4 CPU cores and 30GB RAM. Their notebook scheduling capability is not usable for Numerai submissions, so I have created a workaround with an (always) free Oracle Cloud compute node, running the Kaggle public API and cron to trigger my Numerai notebooks at the announced round opening times. Inside my notebooks I wait for the actual round opening.

To sum it up: I remain at big fat zero automation costs.

Thanks for sharing. Any idea how to go further and get a Kaggle notebook triggered by an external API call, like webhooks or something else?

@svendaj Hi, I just had a thought: include your round readiness checking script in my notebook and still use Deepnote to run the notebook every evening at 9pm, checking the round open status every 10 minutes. I think that will resolve my daily submission now. Again, thanks for sharing.
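The "check every 10 minutes" idea can be sketched roughly as below. The helper name `wait_for_round_open` and its parameters are made up for this example; the only thing taken from the thread is that `napi.check_round_open()` is the call being polled, and it is passed in as a plain callable so the loop itself does not depend on numerapi.

```python
import time


def wait_for_round_open(is_open, interval_seconds=600, max_checks=18, sleep=time.sleep):
    """Poll is_open() until it returns True and return how many checks it took.

    Raises TimeoutError if the round is still closed after max_checks attempts.
    The defaults (10 minutes, 18 checks = about 3 hours) are arbitrary.
    """
    for attempt in range(1, max_checks + 1):
        if is_open():
            return attempt
        if attempt < max_checks:
            sleep(interval_seconds)  # no need to sleep after the final check
    raise TimeoutError(f"round still closed after {max_checks} checks")


# Intended usage inside the scheduled notebook (not executed here):
# from numerapi import NumerAPI
# napi = NumerAPI()
# wait_for_round_open(napi.check_round_open)
# ... then download live data, predict, and upload.
```

Capping the number of checks means a scheduled run gives up eventually instead of burning notebook hours if the round never opens.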

I just tried napi.check_round_open() in Deepnote.

I realized that Deepnote gives the following error: AttributeError: ‘NumerAPI’ object has no attribute ‘check_round_open’

The same code runs fine in Colab.

Has anyone encountered this issue in Deepnote before?
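One possible cause, and this is only a guess since nothing in the thread confirms it, is that the two environments have different numerapi releases installed and the older one predates `check_round_open`. A small check like the following, run in both places, would show whether that is the case. The helper `describe_method_support` is a made-up name for this sketch.

```python
from importlib import metadata


def describe_method_support(obj, method_name, package_name):
    """Return (method_available, installed_version) for a quick environment check.

    installed_version is the string "not installed" when the package
    metadata cannot be found.
    """
    try:
        version = metadata.version(package_name)
    except metadata.PackageNotFoundError:
        version = "not installed"
    available = callable(getattr(obj, method_name, None))
    return available, version


# Intended usage (not executed here):
# from numerapi import NumerAPI
# print(describe_method_support(NumerAPI(), "check_round_open", "numerapi"))
```

If the method is reported as unavailable and the version differs from the one in Colab, upgrading the package in the Deepnote environment would be the first thing to try.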

Sorry for the late reply; so far I have been working on running my notebook swarm in Kaggle. Today I published a Kaggle notebook which can create and control a complex pipeline of other notebooks.

This was needed for my Saturday night Numerai fever, when I was manually running 18 notebooks: starting with the weekly data update, immediately followed by 9 notebooks and 3 sub-pipelines with models to be retrained on the weekly data. When this was ready (usually Sunday morning), I launched the final stacking notebook.

Now I am able to put everything into one master notebook and launch it automatically with cron from the cloud.

Webhooks will be my next step, but I am not sure when I will have time to look into them.

Thanks for sharing: “one ring to rule them all”.

Would you mind explaining how you got the notebook to make submissions on Deepnote? What I really mean is getting the auto-submission to work. Using the Numerai submission API gives really funny errors.

I have had exactly the same problem, only in VS Code on my own computer. It must have something to do with the API. If you manage to solve the problem, please let me know.