I already did this analysis some time ago, but this time we have more data: rounds 300 to 512…

Note: the correlation used in these plots is the corr20 field available in the roundDetails.modelData API. It is not clear whether that is the new corrV2 or the old one. I would love to compare the two, but they are currently not available: there are plenty of corr* fields, but only corr20 and corj60 are populated. It would be possible to retrieve both correlations (the old one and the new v2) via the v3UserProfile API. Here is an example of how to use that API. However, downloading data via v3UserProfile is too slow, so I won’t do that.

TC vs CORR

Here we see the basic relationship between TC and CORR (left plot). To highlight the results, we also group CORR by bin (positive corr → bins 1,2,3,4,5 and negative corr → bins -1,-2,-3,-4,-5) and display the mean TC for each bin (right plot).
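The binning described above can be sketched as follows. This is only a minimal illustration, not the code used for the plots: the post does not say how bin edges are chosen, so the sketch assumes equal-count bins ranked by magnitude within each sign, and the names `signed_bins` and `mean_tc_by_corr_bin` are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def signed_bins(values, n_bins=5):
    """Assign each value a signed bin label.

    Positive values get 1..n_bins and negative values get -1..-n_bins,
    ranked by magnitude within each sign (assumption: equal-count bins).
    Values equal to zero keep the label 0.
    """
    bins = [0] * len(values)
    for sign in (1, -1):
        # Indices of the values with this sign, ordered by increasing magnitude.
        idx = sorted(
            (i for i, v in enumerate(values) if v * sign > 0),
            key=lambda i: abs(values[i]),
        )
        for rank, i in enumerate(idx):
            bins[i] = sign * (rank * n_bins // len(idx) + 1)
    return bins


def mean_tc_by_corr_bin(corr, tc, n_bins=5):
    """Return {bin label: mean TC of the models whose CORR falls in that bin}."""
    groups = defaultdict(list)
    for b, t in zip(signed_bins(corr, n_bins), tc):
        groups[b].append(t)
    return {b: mean(ts) for b, ts in sorted(groups.items())}
```

Calling `mean_tc_by_corr_bin(corr, tc)` on two equally long lists gives the values that a bar chart like the right-hand plot would display.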

While there is definitely some positive correlation between the values (the plot shows a positive regression slope), there are way too many positive CORR values with negative TC and way too many negative CORR values with positive TC. A user would need hundreds of models to be able to take advantage of this small correlation without being affected by the noise.

Since there are good rounds where the average CORR is positive and bad rounds where the average CORR is negative, it is also interesting to compare TC and CORR relative to the round: that is, we want to see if the top (or bottom) CORR values match the top (or bottom) TC values for that round, independently of their absolute values. To do so we can plot the zscore of TC and CORR computed by round (zscore(metric) = (metric - metricRoundMean) / metricRoundStdDev).
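As a rough sketch of the per-round zscore defined above (the function name `zscore_by_round` is hypothetical, and using the population standard deviation is an assumption, since the post does not say which estimator was used):

```python
from collections import defaultdict
from statistics import mean, pstdev


def zscore_by_round(rounds, values):
    """Standardize each value against the mean and std dev of its own round."""
    by_round = defaultdict(list)
    for r, v in zip(rounds, values):
        by_round[r].append(v)

    # Per-round (mean, population std dev); pstdev is an assumption.
    stats = {r: (mean(vs), pstdev(vs)) for r, vs in by_round.items()}

    out = []
    for r, v in zip(rounds, values):
        mu, sd = stats[r]
        # A round where every value is identical has no spread: report 0.
        out.append((v - mu) / sd if sd > 0 else 0.0)
    return out
```

Applying it once to the CORR column and once to the TC column yields the two axes of the zscore plot.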

Unfortunately this plot tells us more or less the same story about TCvsCORR.

TC vs FncV3

Same plots, but for FncV3. Similarly to CORR, there is a positive correlation between TC and FncV3, but it is very noisy.

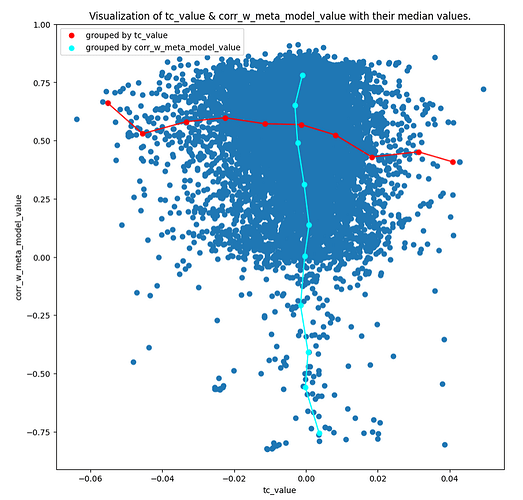

TC vs CorrWMetaModel

Nothing interesting to report on CorrWMetaModel. Being uncorrelated with the Meta Model doesn’t automatically give a positive TC, and vice versa. We’ll see later if the combination of CORR and CorrWMetaModel shows something different.

TC vs CORR by FncV3

Let’s see how TCvsCORR relationship varies across FncV3 values. We split the model FncV3 values into bins (positive FncV3 → bins 1,2,3,4,5 and negative FncV3 → bins -1,-2,-3,-4,-5) and we plot TCvsCORR for each FncV3 bin.
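One way to put a number on the TCvsCORR relationship inside each bin is the least-squares slope of TC on CORR. The sketch below assumes the bin labels have already been computed (for example from FncV3 or CorrWMetaModel); the names `ols_slope` and `slope_by_bin` are hypothetical, and this is not necessarily how the plots were produced.

```python
from collections import defaultdict


def ols_slope(xs, ys):
    """Least-squares slope of y on x; NaN when x has no variance."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        return float("nan")
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx


def slope_by_bin(bin_labels, corr, tc):
    """Return {bin label: slope of TC regressed on CORR within that bin}."""
    groups = defaultdict(lambda: ([], []))
    for b, c, t in zip(bin_labels, corr, tc):
        groups[b][0].append(c)
        groups[b][1].append(t)
    return {b: ols_slope(cs, ts) for b, (cs, ts) in sorted(groups.items())}
```

A steeper slope in a bin means TC responds more strongly to CORR for the models in that bin.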

Interestingly enough, extreme FncV3 values (bins -5 and 5) result in a stronger correlation between TC and CORR.

However it looks different if we plot the same data using the zscore of TC and CORR.

TC vs CORR by CorrWMetaModel

Let’s see how the TCvsCORR relationship varies with a model’s correlation with the MetaModel. We split the model CorrWMetaModel values into bins (positive CorrWMetaModel → bins 1,2,3,4,5 and negative CorrWMetaModel → bins -1,-2,-3,-4,-5) and we plot TCvsCORR for each bin.

It seems that models with low correlation with the MetaModel (bins -1 and 1) have a slightly stronger TCvsCORR relationship, and this still holds when we plot the same data using the zscore of TC and CORR.

TC vs CORR by Round

The last thing I wanted to check is how the TCvsCORR relationship varies over time. You can see that the variance is very high, and that TC and CORR are even inversely correlated at some points in time. By the way, we can also see that the average CorrWMetaModel of all models is decreasing over time.
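A per-round view like this can be approximated by computing one correlation coefficient between CORR and TC for every round. The sketch below uses the Pearson coefficient, which is an assumption (the post does not state which measure is plotted), and `pearson` and `tc_corr_relationship_by_round` are hypothetical names.

```python
from collections import defaultdict
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation; NaN if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / sqrt(sxx * syy)


def tc_corr_relationship_by_round(rows):
    """rows: iterable of (round, corr, tc) tuples, one per model and round.

    Returns {round: Pearson correlation between CORR and TC in that round},
    ordered by round number.
    """
    by_round = defaultdict(lambda: ([], []))
    for rnd, corr, tc in rows:
        by_round[rnd][0].append(corr)
        by_round[rnd][1].append(tc)
    return {r: pearson(cs, ts) for r, (cs, ts) in sorted(by_round.items())}
```

Rounds where the returned value is negative are the ones where TC and CORR were inversely correlated.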

I also wanted to verify whether the TCvsCORR relationship depends on how easy or difficult a round was (positive or negative mean round payout/corr/tc). The naive idea I wanted to double-check is: “when the round average correlation is negative then TC rewards models differently”.